Almost a year ago, we covered the then newly introduced cmdlet for fetching data out of the Microsoft 365 Activity explorer: Export-ActivityExplorerData. Now, Microsoft is introducing a similar cmdlet for the Content explorer tool, namely Export-ContentExplorerData. In this article, we will take a look at how the cmdlet works, but first, a brief refresher on what the Content explorer is all about.

The Content explorer tool can be accessed under the Data Classification section of the Compliance center. In a nutshell, its purpose is to give you an overview of all items labeled with sensitivity and/or retention labels, or items that match one or more Sensitive information types or Trainable classifiers. Apart from the breakdown by “type”, you can get a list of items per workload, down to the individual folders containing the item. For each item, you can get additional metadata, such as Document ID or the confidence level, and the option to preview the actual item. As the tool obviously exposes some very sensitive items, it’s important to properly restrict access to it. Lastly, the tool also offers an Export functionality, which allows you to obtain a CSV listing all items matching the current selection. The export feature leaves a lot to be desired, as it only shows file names and the matched SITs/labels. No additional metadata is exposed, not even the location of the item (i.e. which ODFB/Site it is located in, which folder, etc).

Enter the Export-ContentExplorerData cmdlet. Now available in public preview as part of the Security and Compliance Center PowerShell cmdlets, Export-ContentExplorerData allows you to generate a list of all items matching a given label or SIT, with optional parameters allowing you to scope down the export to specific workloads and even down to individual sites/mailboxes. The supported parameters include:

- TagType – defines the “type” of matched tag to include in the output, with available choices of: Retention, Sensitivity, SensitiveInformationType and TrainableClassifier. Mandatory.

- TagName – which specific label/SIT/classifier to include in the output. The choice here depends on the set of labels available within the tenant. Mandatory parameter, accepting a single value only.

- Workload – used to scope the export to a specific workload only. Available values include: EXO, SPO, ODB, Teams, Exchange, OneDrive, SharePoint. Optional.

- UserPrincipalName – another optional “scoping” parameter, used to return only results within a given user’s mailbox and/or Teams chats.

- SiteUrl – similar to the above, but used to scope the export to items within a specific SPO/ODFB site.

- PageSize – specify the maximum number of entries to return per page. Optional parameter with a default value of 100. The maximum allowed size for a single page is 10000.

- PageCookie – if the number of results exceeds the maximum specified via the –PageSize parameter (or the 10k max), you can use this parameter to retrieve the next “page” of results. The corresponding “cookie” value is returned as part of the output (see below).

With the above in mind, let’s see a few examples of the cmdlet in action. Do remember to connect to the SCC endpoint first, and of course, make sure you have the relevant permissions. The first example below lists all items carrying the “KQL” retention label:

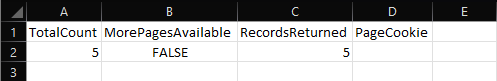

Export-ContentExplorerData -TagType Retention -TagName KQL

TotalCount MorePagesAvailable RecordsReturned PageCookie

---------- ------------------ --------------- ----------

10 False 6

As we can see from the output, a total of 10 items were found. Herein lies the first issue you might encounter with the cmdlet, as the set of results (RecordsReturned) might not represent what you see within the Compliance center. In my case, the cmdlet indicated that 10 items matching the specified retention label were found, yet it returned only 6 of them. Comparing the list with the set of results within the Compliance Center, we note that none of the results within Exchange Online were returned. In fact, if we scope the export down to just the Exchange workload, we get zero entries:

Export-ContentExplorerData -TagType Retention -TagName KQL -Workload Exchange

TotalCount MorePagesAvailable RecordsReturned PageCookie

---------- ------------------ --------------- ----------

4 False 0

Another annoyance is the way the cmdlet handles output. Unlike any other Office 365 cmdlet I’ve worked with, Export-ContentExplorerData returns two different object types within its output. The first one (of type Deserialized.Microsoft.Exchange.Management.LabelAnalytics.ContentExplorer.CmdletOutputDetail) returns the overall information about the query: the complete set of matches (TotalCount), the number of matches returned (RecordsReturned), whether additional pages are available (MorePagesAvailable) and if so, the corresponding “cookie” (PageCookie), to be used to retrieve the next set. If any matches are found, they are returned as objects of a second type (Deserialized.Microsoft.Exchange.Management.LabelAnalytics.ContentExplorer.CmdletOutputFileItem). In effect, this makes it a bit more complicated than necessary to work with the results.

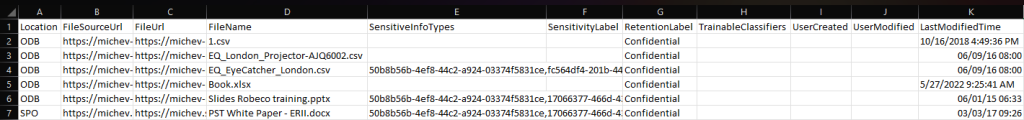

As the two objects have different sets of properties, piping the output to the Export-CSV cmdlet just makes a big mess, as illustrated in the screenshot below. Not a good behavior for a cmdlet with the “Export” verb…

Export-ContentExplorerData -TagType Retention -TagName "Confidential" | Export-Csv D:\Downloads\blabla.csv -NTI

This is of course the expected behavior for such scenarios, as clearly stated in the official documentation for the Export-CSV cmdlet:

When you submit multiple objects to Export-CSV, Export-CSV organizes the file based on the properties of the first object that you submit. If the remaining objects do not have one of the specified properties, the property value of that object is null, as represented by two consecutive commas. If the remaining objects have additional properties, those property values are not included in the file.

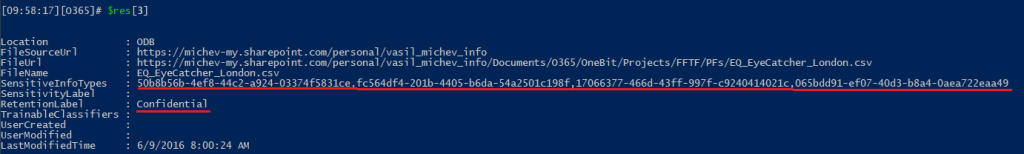
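The effect is easy to reproduce without a tenant. What follows is only a minimal sketch with mock objects: the summary object mirrors the four properties shown earlier, while the record properties (`Name`, `Path`) are invented for illustration and are not the cmdlet's real output schema.

```powershell
# Mock stand-ins for the two object types. The record properties are made up.
$summary = [pscustomobject]@{
    TotalCount         = 10
    MorePagesAvailable = $false
    RecordsReturned    = 6
    PageCookie         = $null
}
$record = [pscustomobject]@{
    Name = 'report.docx'
    Path = 'https://contoso.sharepoint.com/sites/demo'
}

# ConvertTo-Csv applies the same rule as Export-Csv:
# the columns are taken from the first object only.
$csv = @($summary, $record | ConvertTo-Csv -NoTypeInformation)

# The header lists only the summary properties, and the record row is blank,
# because the record has none of those properties.
$csv
```

Since the summary object always comes first, the records effectively lose all of their columns, which is the "big mess" described above.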

Now, if we only wanted to take a look at the output within the PowerShell console, we could pipe it to the Format-List cmdlet and use the –Force parameter to view both objects (i.e., add “| fl * -force” at the end of the line). You might however want to actually export the output to a CSV file, so how do we go about that?

My preferred method is to store the cmdlet output in a variable, say $res, then use $res[0] to get the statistics, and abuse the way PowerShell works with arrays to handle the actual output. As with any other array, we can get each individual item by using the $res[item_number] format, say $res[2] to retrieve the second item (index 0 holds the statistics, so the items start at index 1). Rinse and repeat until you get the last item (you can leverage $res[0].RecordsReturned to estimate the array’s size). Or get a set of items by range, i.e. $res[1..6]. As PowerShell silently ignores out-of-range indexes in a range, we can enter any arbitrary number for the upper bound, for example $res[1..10000]. This in turn should give us up to 10000 entries of the output (not counting the “statistics” one, $res[0]), or however many are actually available.

In other words, to facilitate the task of actually exporting the output of Export-ContentExplorerData to a CSV file, we can use something like this:

$res = Export-ContentExplorerData -TagType Retention -TagName "Confidential"

$res[1..10000] | Export-Csv D:\Downloads\blabla.csv -NTI

Now this looks much more acceptable. And, in contrast with the CSV obtained via the UI export, it actually features some useful data, such as the full path to the item and the last modified timestamp.
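For anyone who wants to try the split between statistics and records without a connected session, here is a minimal sketch using mock data. The record properties (`Name`, `Path`) and the temporary file name are assumptions made for the example. It also uses `Select-Object -Skip 1` as an alternative to the range trick, which avoids picking an arbitrary upper bound.

```powershell
# Mock output: element 0 is the summary, the rest are records (properties invented).
$res = @(
    [pscustomobject]@{ TotalCount = 3; MorePagesAvailable = $false; RecordsReturned = 3; PageCookie = $null }
    [pscustomobject]@{ Name = 'a.docx'; Path = '/sites/demo/a.docx' }
    [pscustomobject]@{ Name = 'b.docx'; Path = '/sites/demo/b.docx' }
    [pscustomobject]@{ Name = 'c.docx'; Path = '/sites/demo/c.docx' }
)

# The first element carries the query statistics.
$stats = $res[0]

# Everything after it is a record; here this yields the same items as $res[1..10000].
$records = @($res | Select-Object -Skip 1)

# Export only the records, so every row shares the same columns.
$csvPath = Join-Path ([System.IO.Path]::GetTempPath()) 'explorer-demo.csv'
$records | Export-Csv -Path $csvPath -NoTypeInformation

# Read the file back to confirm the rows survived the round trip.
$imported = @(Import-Csv -Path $csvPath)
```

Comparing `$stats.RecordsReturned` against `$records.Count` is a cheap sanity check that nothing was dropped along the way.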

To understand how the Export-ContentExplorerData cmdlet behaves with a large set of results, we can use a simple trick – set the –PageSize value to 1. Now, the same query we used above will be split into multiple pages, each containing a single result. Each “page” in turn will be returned with the PageCookie value populated, which you can then use to retrieve the next set. The below example illustrates the process:

$res2 = Export-ContentExplorerData -TagType Retention -TagName KQL -PageSize 1

$res2[0].PageCookie

jVJtb9owEP4v%2foybd0Ii7UMoW5V1aKxAN2mZKse%2bBIvERrZhRaj%2ffXboGJM2qafEuvOd757nsU%2fIHHeA8mCEOEM58imhITQNHidBiOOYjjGxLm4mCUsyO...

Export-ContentExplorerData -TagType Retention -TagName KQL -PageSize 1 -PageCookie $res2[0].PageCookie

One can of course automate the process a bit:

$out = @()

$query = Export-ContentExplorerData -TagType Retention -TagName KQL -PageSize 1

while ($query[0].MorePagesAvailable) {

$out += $query[1..9999]

$query = Export-ContentExplorerData -TagType Retention -TagName KQL -PageSize 1 -PageCookie $query[0].PageCookie

}

$out += $query[1..9999]

$out

Of course, in a real-life scenario you will not be using a –PageSize value of 1, but the above should work for any supported value. Note the $out += $query[1..9999] line after the loop: it appends the last page, which is also the only page when the full set of results fits within the initial query.
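The same loop can be wrapped into a reusable function. The sketch below is one possible shape, not an official pattern: `Get-AllExplorerRecords` is a hypothetical name, and the page-fetching logic is passed in as a script block so the paging can be exercised against mock pages (whose record properties are invented). It assumes, as the loop above does, that the first object of every page is the summary.

```powershell
# Hypothetical helper: collects records across pages.
# $FetchPage receives a cookie (or $null for the first page) and returns the raw
# cmdlet-style output: the summary object first, then the records.
function Get-AllExplorerRecords {
    param(
        [Parameter(Mandatory)] [scriptblock] $FetchPage
    )
    $records = [System.Collections.Generic.List[object]]::new()
    $cookie = $null
    do {
        $page = @(& $FetchPage $cookie)
        if ($page.Count -eq 0) { break }          # nothing came back at all
        $summary = $page[0]
        $page | Select-Object -Skip 1 | ForEach-Object { $records.Add($_) }
        $cookie = $summary.PageCookie
    } while ($summary.MorePagesAvailable -and $cookie)
    return $records
}

# Mock pages keyed by cookie; the empty string stands for "no cookie yet".
$mockPages = @{
    ''   = @(
        [pscustomobject]@{ TotalCount = 3; MorePagesAvailable = $true; RecordsReturned = 2; PageCookie = 'c1' }
        [pscustomobject]@{ Name = 'a.docx' }
        [pscustomobject]@{ Name = 'b.docx' }
    )
    'c1' = @(
        [pscustomobject]@{ TotalCount = 3; MorePagesAvailable = $false; RecordsReturned = 1; PageCookie = $null }
        [pscustomobject]@{ Name = 'c.docx' }
    )
}
$all = @(Get-AllExplorerRecords -FetchPage { param($c) $mockPages["$c"] })

# Against a connected session, the script block could instead look like:
# { param($c)
#   if ($c) { Export-ContentExplorerData -TagType Retention -TagName KQL -PageCookie $c }
#   else    { Export-ContentExplorerData -TagType Retention -TagName KQL } }
```

Collecting into a generic list avoids the repeated array copying that `+=` performs, which starts to matter once the result set spans many pages.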

Lastly, here are some examples on how to leverage the –UserPrincipalName and –SiteUrl parameters. The first example scopes down the search to just results within my own mailbox, whereas the second one searches my own ODFB site. In both cases, I must specify the –Workload parameter as well, otherwise the cmdlet will throw an error. And, in both cases only a single “location” can be searched, that is, you cannot provide a set of mailboxes or sites to search.

Export-ContentExplorerData -TagType SensitiveInformationType -TagName "South Korea Driver's License Number" -UserPrincipalName vasil@michev.info -Workload ExO

Export-ContentExplorerData -TagType SensitiveInformationType -TagName "South Korea Driver's License Number" -Workload ODB -SiteUrl "https://michev-my.sharepoint.com/personal/vasil_michev_info"
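Because only one location is accepted per call, covering several mailboxes means running one query per mailbox. The following is a rough sketch of how the parameter sets could be prepared for splatting. `New-ExplorerQueryParams` is a hypothetical helper, not part of any Microsoft module, and the mailbox addresses and tag name are placeholders. It only builds hashtables; the actual cmdlet call is left as a comment since it needs a connected session.

```powershell
# Hypothetical helper: builds a splatting hashtable and enforces the rule that
# -Workload must accompany -UserPrincipalName or -SiteUrl.
function New-ExplorerQueryParams {
    param(
        [Parameter(Mandatory)] [string] $TagType,
        [Parameter(Mandatory)] [string] $TagName,
        [string] $Workload,
        [string] $UserPrincipalName,
        [string] $SiteUrl
    )
    if (($UserPrincipalName -or $SiteUrl) -and -not $Workload) {
        throw '-Workload is required when scoping by -UserPrincipalName or -SiteUrl.'
    }
    $params = @{ TagType = $TagType; TagName = $TagName }
    if ($Workload)          { $params.Workload = $Workload }
    if ($UserPrincipalName) { $params.UserPrincipalName = $UserPrincipalName }
    if ($SiteUrl)           { $params.SiteUrl = $SiteUrl }
    return $params
}

# One parameter set per mailbox (placeholder addresses and tag name).
$mailboxes = 'user1@contoso.com', 'user2@contoso.com'
$queries = foreach ($upn in $mailboxes) {
    New-ExplorerQueryParams -TagType SensitiveInformationType `
        -TagName 'Credit Card Number' -Workload EXO -UserPrincipalName $upn
}

# With a connected session, each set could then be splatted onto the cmdlet:
# foreach ($q in $queries) { Export-ContentExplorerData @q }
```

Failing early on a missing workload keeps the error local to the script instead of surfacing it from the service halfway through a long run.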

Some additional things need to be mentioned. There is currently no way to use the cmdlet to list all Tag names that are in use within the organization, so you will have to obtain this data by other means. This in turn makes it difficult to get the “overview” of the matches across all Tag types/names, which you can get at a single glance via the Content explorer. And, as an added annoyance, the cmdlet will happily accept any input for the –TagName parameter, without performing any validation. The resulting output will always contain 0 entries in such cases.

Sadly, most of the additional metadata exposed within the Content explorer tool seems to be unavailable in the cmdlet output. Another minor annoyance: the output mixes tag names and GUIDs.

One last annoyance, which is likely related to the Content explorer itself and not the cmdlet, is that many of the Tag types fail to return results from Exchange. We already observed the discrepancy between TotalCount and RecordsReturned above, which, at least in my experience, always seems to involve Exchange items. Specifically, only Sensitive Information Types seem to ever return any ExO results, whereas none of the label types work. This might be some weird permissions issue or limitation, as even for SITs I can only get results for my own mailbox and any other mailbox I have Full Access permissions on. In any case, my attempts to resolve this by removing and re-adding all possible Content explorer related permissions and waiting for a few days didn’t help.

In summary, while the Export-ContentExplorerData cmdlet is certainly a good addition, its current implementation leaves a lot to be desired. The “export” part in particular is crippled by the funky choice of output, and I have a feeling that many people will have challenges getting to the actual data therein. Some additional improvements, such as being able to fetch the full set of records or at least some numbers, would be appreciated, and so would additional metadata for any of the matches.

I created a script to parse through all of this and append friendly names to SIT and Labels it finds on items but I wondered, is there a command which includes the confidence level on the matches it finds? right now I just get every SIT match and what other SIT’s matched on the same item as well as any applied labels.

Nope, it’s very light on the metadata 🙁

I need this for a customer case, but the cmdlet seems to ignore the -URL parameter, I always get results from the same site.

I can get this to work on some sites but on most I keep getting error “Cannot index into a null array”. Any ideas?

Likely zero entries matching the site, thus returning an empty array

I know the site has matching data and it will fail at different points every time I run it.

Hey Vasil,

Is it possible to do a top user count for a particular SIT?

I’d love to produce a report of the top users that have the most, say, credit card numbers across all workloads.

Should be possible via -TagType SensitiveInformationType -TagName “bla bla”, and then collating the results. Though I’m not sure how you’d handle SPO results, as the metadata generated doesn’t directly tell you the user’s involvement in a specific file.

When I try running this command it tells me the command is not enabled in my tenant.

I get the same error today

Clarification*

It stopped working on my side. Same permissions, same setup.

Should have something broken on MS side?

Same here, MS making some changes it seems. I’ll see if I can dig out some info…

mine started working – I had to remove the content list permission and re-add my permission, then all of the sudden it started working. The issue I face now is I have too much data being returned – I have a SIT that has over 7800 hits, and the command times out. Has anyone run into this?

I had this same issue, I have a script that expands everything in SIT matches to Excel and it just randomly stopped working, I found the ‘content list viewer’ role was reset and I was no longer in it. once I added myself it worked again.

Hi Vasil!

You are missing one line in the automation of data aggregation through pages (code coming after “One can of course automate the process a bit”). The issue is that you are either skipping appending data from an only page (when export fits on one page) or from the last page (when it does not).

Just add the following line after the while loop 🙂

$out += $query[1..9999]

I do mention this scenario below the example, but point taken 🙂

Great article. Very insightful. Thank you, Vasil!

Is it possible to export content explorer data for Sensitive information types with confidence level?

No, not currently at least.

Thank you, very informative.